[Leetcode 334] Increasing Triplet Subsequence

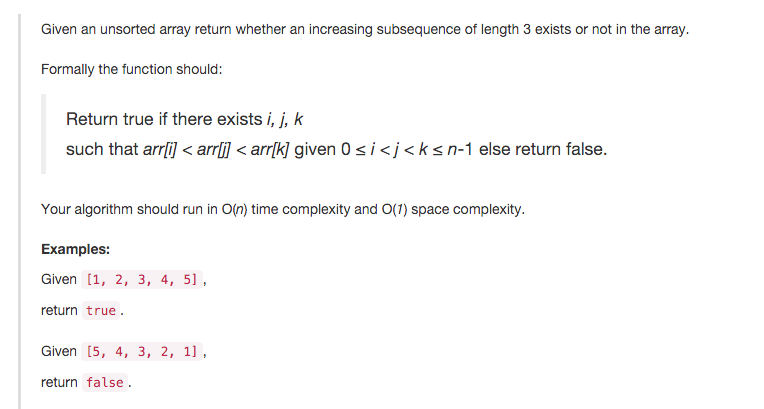

Given an array, return whether an increasing subsequence of length 3 exists or not in the array, i.e., given array , if there exist such that , return true, otherwise, return false. More details you can refer to leetcode 334.

Solutions

Brute Force

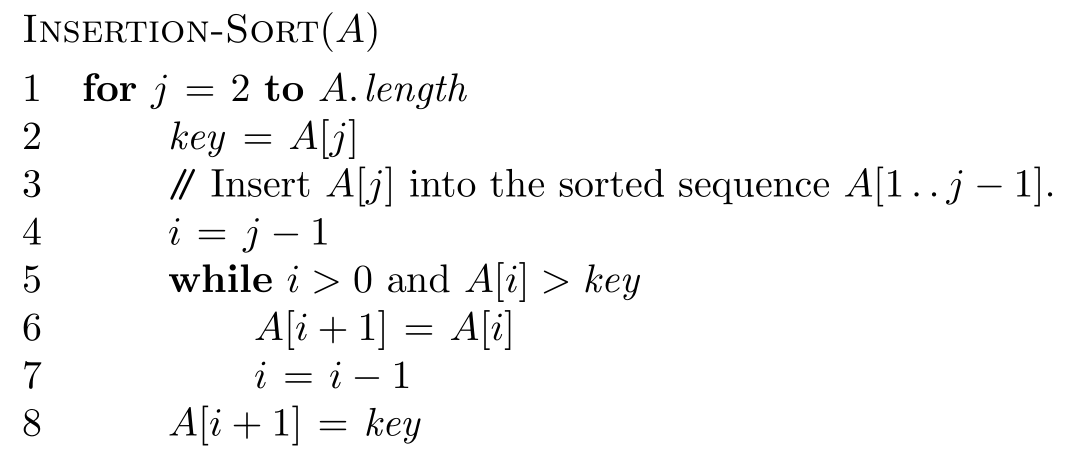

The easiest idea would be the brute force. It works as follows. For every , check whether there exists and such that and . The following gives its pseudo code.

n = len(A)

for k in xrange(1,n - 1):

f = False

for i in range(k):

if A[i] < A[k]:

f = True

break

if f:

for j in xrange(k + 1,n):

if A[j] > A[k]:

return True

return False

It is obvious, the above algorithm has time complexity of and space complexity of .

Small Improvement

In the brute force algorithm, if you do some preprocessing, you can easily avoid the inner for loop, which means you can reduce the time complexity to . How does the preprocessing work? Actually it is quite easy. Imagine that if you have known the minimum number (denoting as ) before and the maximum number (denoting as ) after , then you only need to check whether . And of course the preprocessing is to calculate and . Its pseudo code is shown in the following.

n = len(A)

T = [A[0]]

Q = [A[-1]]

for k in xrange(1,n):

T.append(min(T[-1],A[k - 1]))

Q.insert(max(Q[0],A[n - k - 1]))

for k in xrange(1,n-1):

if A[k] > T[k] and A[k] < Q[k]:

return True

return False

It is easy to figure out that this algorithm has time complexity of , however, it also has space complexity of .

Even Better

Can we do better? Yes, of course, first of all, we can at least reduce the space from to (e.g., replacing array with an integer number), however, the space complexity is still , just with a smaller constant factor. It seems that based on the previous idea (i.e., through comparing the minimum number before an element and the maximum one after it), we can hardly reduce the space requirement further.

Let’s change our though a little bit. Imagine if you know the “second order minimum element”, here the “second order minimum element” is the minimum element which has at least one element before it and less than it, mathematically, . Then, through comparing with it, we can get whether there is an increasing triplet subsequence in . Since, for any , we only need to know the “second order minimum element” in , so we do not need preprocessing, and if we can update the “second order minimum element” in space and time when we go from to , then we can design an algorithm with time complexity of and space complexity of .

Now, let’s focus on the key question: can we update the “second order minimum element” in space and time when we go from to ? Assume is the “second order minimum element” in , if , then we are done, because it means there exists an an increasing triplet subsequence in . However, things get tricky when . We cannot directly update as , since we can not guarantee there exists at least an element that is less than in . Go one step further, if we have the record of the minimum element (denoting as ) in , then if , then is also the “second order minimum element” in , and if , then we can guarantee that there exists at least one element which is is less than , therefore, becomes the “second order minimum element”. Therefore, we can update the “second order minimum element” in space and time when we go from to .

The following shows the pseudo code.

n = len(A)

x = sys.maxint

y = sys.maxint

for k in range(n):

if A[k] > y:

return True

elif A[k] <= x:

x = A[k]

else:

y = A[k]

return False